October 3, 2025


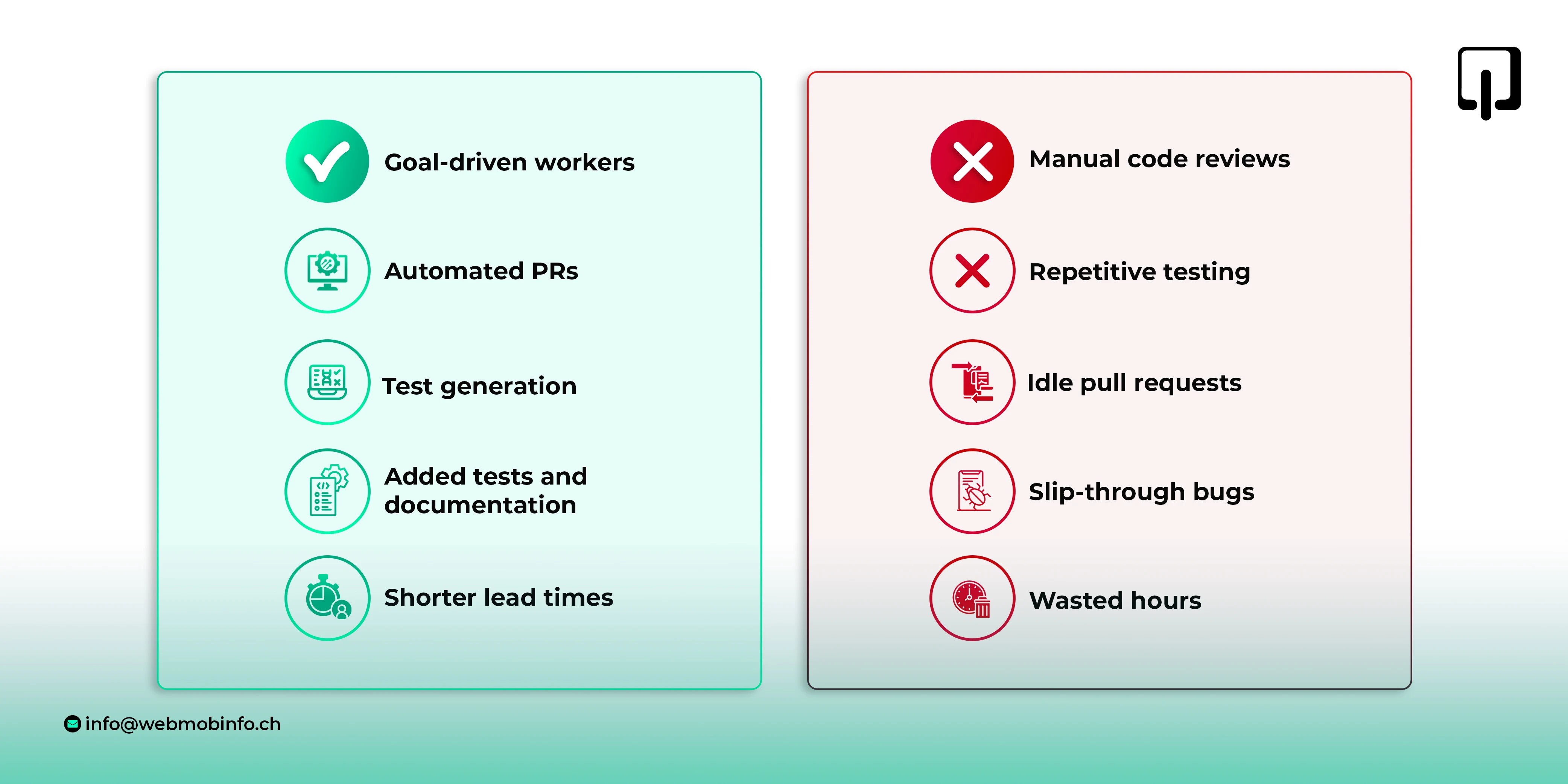

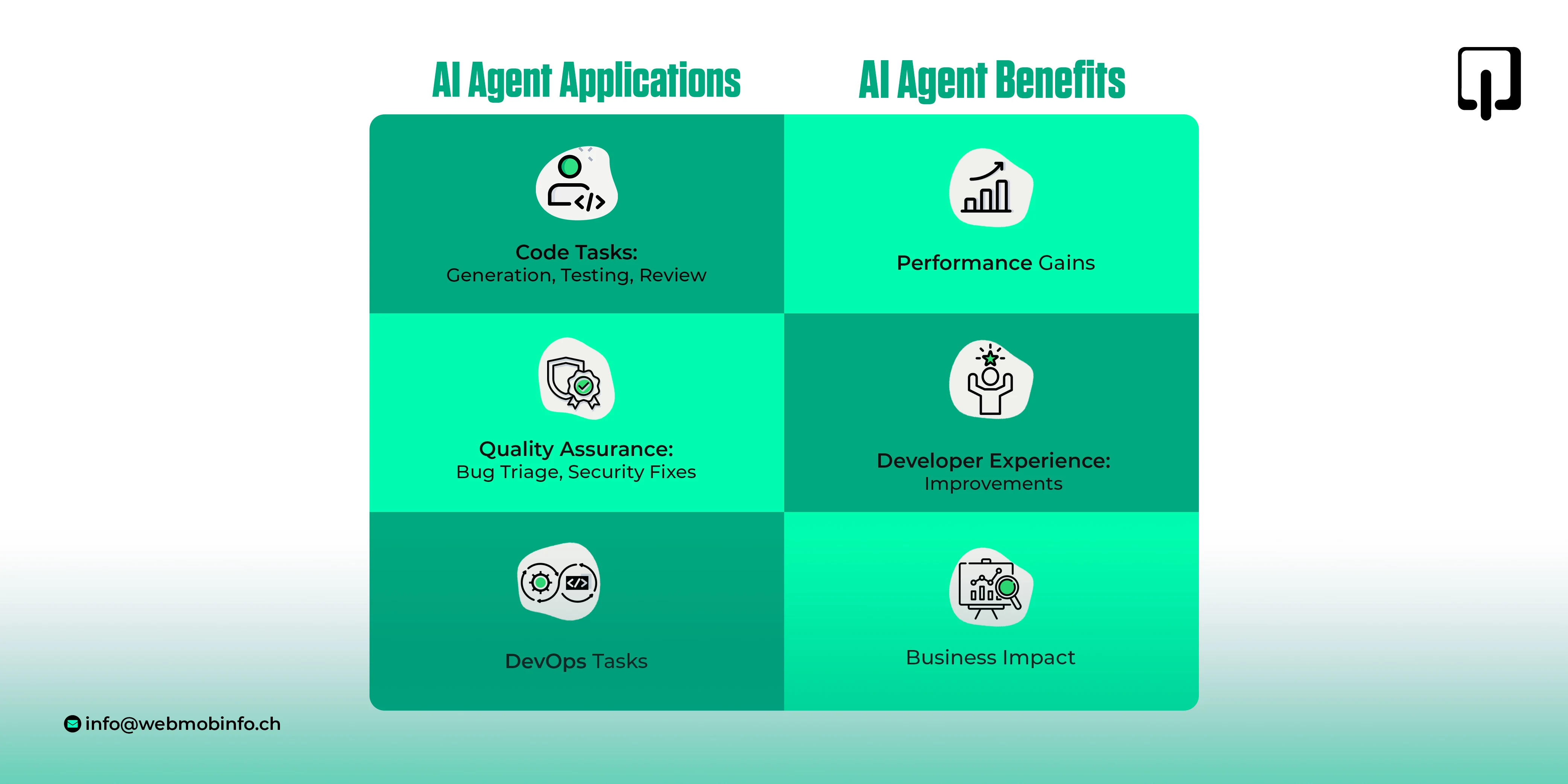

Software delivery moves quickly, yet teams still lose hours to repetitive tasks that feel the same every week. Pull requests sit idle, tests lag behind code changes, and minor bugs slip through, costing real money later. You know what? This is where AI agents for software development earn attention, not hype.

An AI agent is not a chatbot that writes a single code snippet and leaves. It is a goal-driven worker who can plan, utilize tools, reflect on feedback, and try again. It can submit a pull request, add tests, and document the change. For a CTO or an engineering leader, that means shorter lead times, fewer blockers, and a more consistent delivery rhythm. It is not magic. It is better coordination across dozens of small tasks that slow teams down.

In this guide, we explain what AI agents in software development are, how they work, where they help, and how to adopt them without risking your codebase. We also walk through how to pick an AI agent development company if you prefer help over building alone. The goal is simple. After reading, you will know where to start and how to measure results with plain numbers.

AI agents are software systems that read goals, plan steps, use developer tools, and act within your code environment with varying levels of autonomy. They are part assistant, part worker. They matter now for two reasons. First, models have become reliable enough at reasoning over multi-file codebases and long task chains to support day-to-day work. Second, integrations with IDEs, CI/CD, and issue trackers are mature enough that agents fit into your existing stack without rework.

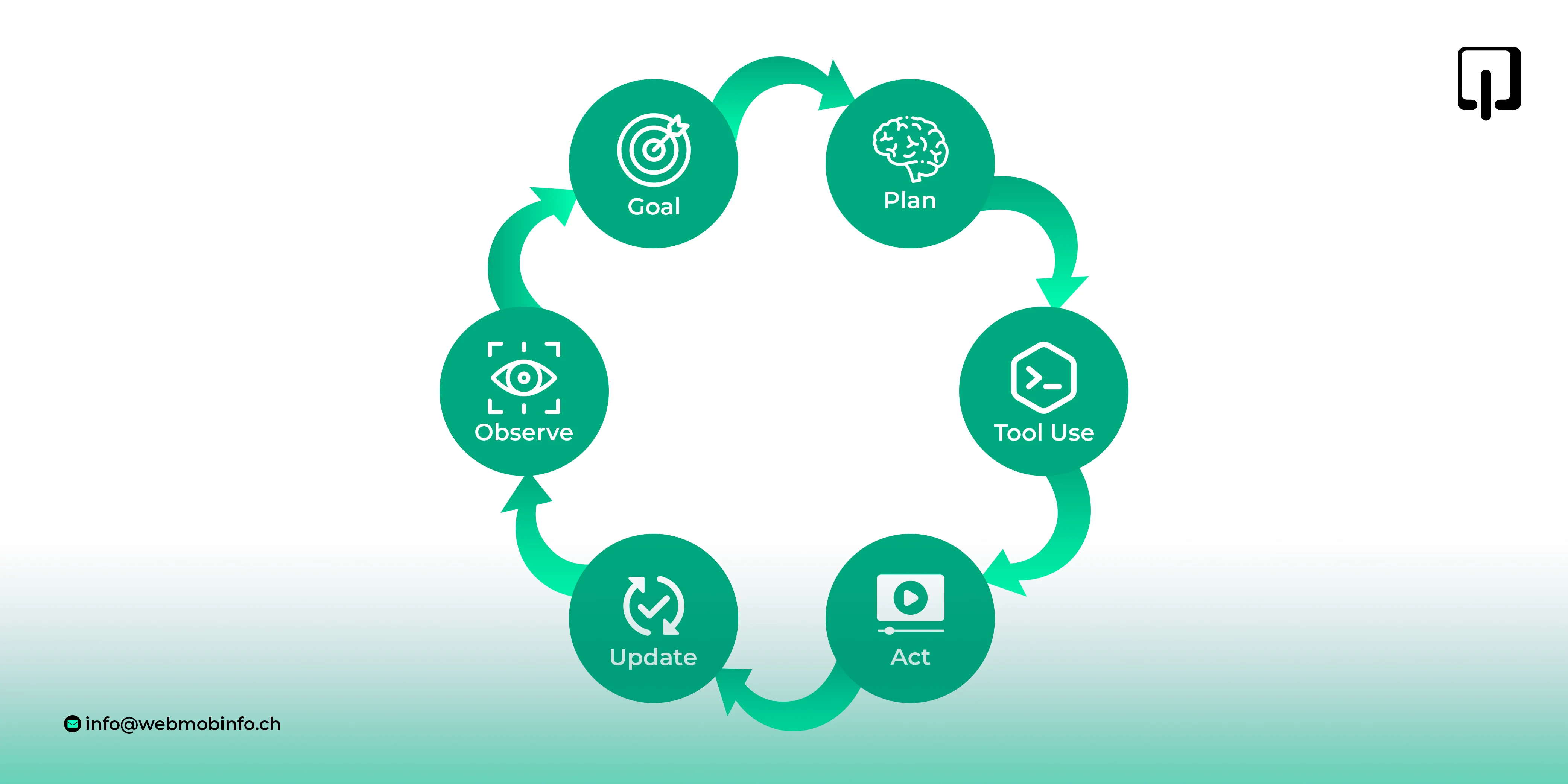

AI agents in software development are software systems that can understand a goal, plan steps, invoke tools such as Git or a test runner, and take actions like editing code, running checks, or opening a pull request. They can track context across steps and learn from feedback. In short, autonomous software agents can carry out multi-step tasks with minimal guidance, without needing a human to step in at every stage.

A typical LLM prompt gives you a one-off answer. An agent runs a loop. It plans, calls tools, observes results, updates the plan, and continues until it reaches the goal or hits a rule that stops it. That loop is what makes AI agents in software development feel more like a teammate than a chatbot.
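To make that loop concrete, here is a minimal Python sketch. It is not the API of any particular agent framework: `plan`, `tools`, and `is_done` are hypothetical stand-ins for an LLM planner, real developer tools, and a goal check, and two simple stop rules (a step budget and an allow-list of tools) play the role of "a rule that stops it".

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentState:
    goal: str
    # Each entry is (tool name, observation returned by that tool).
    history: list[tuple[str, str]] = field(default_factory=list)


def run_agent(
    state: AgentState,
    plan: Callable[[AgentState], str],      # hypothetical planner; an LLM call in practice
    tools: dict[str, Callable[[], str]],    # hypothetical allow-listed tools
    is_done: Callable[[AgentState], bool],  # checks whether the goal is met
    max_steps: int = 10,                    # stop rule: hard step budget
) -> str:
    for _ in range(max_steps):
        if is_done(state):
            return "goal reached"
        action = plan(state)                # plan the next step from the history so far
        if action not in tools:             # stop rule: unknown tools are refused
            return f"stopped: tool '{action}' is not allowed"
        observation = tools[action]()       # call the tool
        state.history.append((action, observation))  # observe; the next plan() sees this
    return "goal reached" if is_done(state) else "stopped: step budget exhausted"


# Toy run: the test suite fails until the agent "edits" the code once.
patched = {"done": False}

def edit_code() -> str:
    patched["done"] = True
    return "patched src/utils.py"

def run_tests() -> str:
    return "all tests passed" if patched["done"] else "1 test failed"

def toy_plan(state: AgentState) -> str:
    # Edit after a failing run; otherwise run the tests.
    if state.history and "failed" in state.history[-1][1]:
        return "edit_code"
    return "run_tests"

def toy_done(state: AgentState) -> bool:
    return bool(state.history) and state.history[-1][1] == "all tests passed"

state = AgentState(goal="make the test suite pass")
result = run_agent(state, toy_plan, {"edit_code": edit_code, "run_tests": run_tests}, toy_done)
print(result)  # goal reached
```

In the toy run the agent tests, sees a failure, edits, and tests again before stopping. A real planner would decide the next step from the same history, which is what separates this loop from a one-off prompt.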

Typical environments include IDEs, CI/CD, repositories, and issue trackers.


Let me explain the core engine in simple language.

Agents write small functions, clean up code smells, extract helpers, and align style with your standards. They can split large files, remove dead code, or modernize syntax.

Agents scan diffs and critical paths, then propose tests that match your framework. They run tests, read the failures, and adjust. Over time, coverage improves with minimal effort from developers.
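One small step in that workflow, spotting which changed files have no tests yet, can be sketched as follows. It assumes a hypothetical layout where `src/<package>/foo.py` is tested by `tests/test_foo.py`; a real agent would follow your framework's actual conventions and coverage reports rather than this naming rule.

```python
from pathlib import PurePosixPath


def expected_test_path(source_path: str) -> str:
    """Map src/<...>/name.py to tests/test_name.py (assumed naming convention)."""
    name = PurePosixPath(source_path).stem
    return f"tests/test_{name}.py"


def files_missing_tests(changed_files: list[str], existing_tests: set[str]) -> list[str]:
    """Return changed source files whose expected test file does not exist."""
    missing = []
    for path in changed_files:
        if not (path.startswith("src/") and path.endswith(".py")):
            continue  # skip docs, configs, and the tests themselves
        if expected_test_path(path) not in existing_tests:
            missing.append(path)
    return missing


changed = ["src/billing/invoice.py", "src/billing/tax.py", "README.md"]
tests = {"tests/test_invoice.py"}
print(files_missing_tests(changed, tests))  # ['src/billing/tax.py']
```

In practice the `changed` list could come from `git diff --name-only` against the target branch, and the flagged files become the agent's first candidates for new tests.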

Agents leave precise comments, fix naming, suggest missing checks, and even push minor corrections to a branch. They also tidy commit history and add context to PR descriptions.

Given a stack trace and logs, the agent locates likely files, drafts a minimal, reproducible example when possible, and opens a clear issue for a human to review.
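Locating likely files does not always need a model. The sketch below parses a Python traceback with a regular expression and keeps only frames from the project's own code, innermost first. The `project_root` prefix and the sample trace are hypothetical, and tracebacks from other languages would need their own patterns.

```python
import re

# Matches traceback lines such as:
#   File "/app/src/orders/service.py", line 42, in create_order
FRAME_RE = re.compile(r'File "(?P<path>[^"]+)", line (?P<line>\d+), in (?P<func>\S+)')


def likely_files(traceback_text: str, project_root: str) -> list[tuple[str, int, str]]:
    """Return (path, line, function) for frames inside project_root, innermost first."""
    frames = [
        (m["path"], int(m["line"]), m["func"])
        for m in FRAME_RE.finditer(traceback_text)
        if m["path"].startswith(project_root)  # drop stdlib and third-party frames
    ]
    return frames[::-1]  # Python prints the innermost frame last


trace = """Traceback (most recent call last):
  File "/app/src/api/routes.py", line 18, in post_order
    return create_order(payload)
  File "/app/src/orders/service.py", line 42, in create_order
    total = split_total(items)
  File "/app/.venv/lib/python3.12/site-packages/pricing/calc.py", line 7, in split_total
    return amount / count
ZeroDivisionError: division by zero"""

for path, line, func in likely_files(trace, "/app/src/"):
    print(f"{path}:{line} ({func})")
```

The innermost project frame (`service.py`, line 42) is the natural starting point for a reproducible example, even though the exception was raised inside a third-party package.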

Agents detect known CVEs, recommend safe upgrades, run tests, and update lock files. They can also add temporary mitigations, accompanied by proper notes.
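One common reading of a "safe upgrade" is the smallest version bump that leaves the vulnerable set without crossing a major version. The sketch below encodes that rule for plain `MAJOR.MINOR.PATCH` strings. The version lists are made up; a real agent would pull advisory data from a vulnerability database and let the test suite confirm the bump.

```python
from typing import Optional


def parse(version: str) -> tuple[int, int, int]:
    """Parse a plain MAJOR.MINOR.PATCH string (no pre-release tags)."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def safe_upgrade(current: str, available: list[str], vulnerable: set[str]) -> Optional[str]:
    """Lowest non-vulnerable version newer than `current` with the same major version.

    Returns None when no such version exists (the fix needs a major upgrade
    or is not released yet), which is a case for a human to review.
    """
    cur = parse(current)
    candidates = sorted(
        parse(v) for v in available
        if v not in vulnerable and parse(v) > cur and parse(v)[0] == cur[0]
    )
    if not candidates:
        return None
    return ".".join(str(n) for n in candidates[0])


available = ["2.4.1", "2.4.2", "2.5.0", "2.10.0", "3.0.0"]
vulnerable = {"2.4.1", "2.4.2"}
print(safe_upgrade("2.4.1", available, vulnerable))  # 2.5.0
```

Comparing versions as integer tuples rather than strings matters here: as strings, "2.10.0" would sort before "2.5.0".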

Agents sync README changes, update CHANGELOG entries, and insert inline comments near tricky code. After merges, they can produce short release notes and tag versions.
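Release notes are easiest to automate when commit messages follow a convention. Assuming the team uses Conventional Commits types such as `feat:` and `fix:` (an assumption; many repositories do not), an agent could group merged commit subjects into CHANGELOG sections like this. The section mapping is illustrative.

```python
from collections import defaultdict

# Conventional Commits type -> release-notes section (assumed mapping).
SECTIONS = {"feat": "Features", "fix": "Bug Fixes", "docs": "Documentation"}


def release_notes(version: str, commit_subjects: list[str]) -> str:
    grouped: dict[str, list[str]] = defaultdict(list)
    for subject in commit_subjects:
        prefix, sep, description = subject.partition(":")
        # "fix(api)" -> "fix", "feat!" -> "feat"
        kind = prefix.split("(")[0].rstrip("!").strip()
        if sep and kind in SECTIONS:
            grouped[SECTIONS[kind]].append(description.strip())
        # Commits without a recognized type (chore:, merge commits, ...) are skipped.
    lines = [f"## {version}"]
    for title in SECTIONS.values():
        if grouped[title]:
            lines.append(f"### {title}")
            lines.extend(f"- {item}" for item in grouped[title])
    return "\n".join(lines)


commits = ["feat(api): add bulk export", "fix: handle empty cart", "chore: bump deps", "Merge branch 'main'"]
print(release_notes("1.3.0", commits))
```

Skipping unrecognized commits keeps the notes short; a human can still edit the draft before the version is tagged.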

Agents add missing workflows, fix broken pipelines, and configure checks for different branches. They can set up Dockerfiles, Compose files, or simple infrastructure templates for development environments.

1. Faster cycle time and higher PR throughput. Small tasks stop clogging the queue.

2. Better test coverage and lower defect escape. Agents look for missed paths and add tests while developers focus on logic.

3. Less developer toil. Repetitive work moves to the agent, which improves morale and focus.

4. Standardized coding patterns. The same rules apply across teams.

5. 24/7 task handling during off-hours. Nightly runs keep the repo tidy and secure.

Common agent types include the Coding Agent, Test Generation Agent, Code Review Agent, Security Fix Agent, and Docs Agent.

Choosing a partner is as important as selecting the tools. The right AI agent development company brings architectural skill, strong security habits, and a proven rollout approach. Here is a clear checklist.

Security is not optional. Establish strict rules from the outset and adhere to them. Here is a simple frame that works.

The field moves fast, but the direction is visible and useful for planning.

Expect agents that specialize. One focuses on tests, another on docs, another on release steps. They share context through a common memory layer and hand tasks to each other.

Policies will define what an agent can do in each repository: for example, full autonomy in a documentation repository, partial autonomy in a library, and strict human oversight in a payments service.
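Such a policy can start as a small table that maps each repository to an autonomy level and each level to the actions it permits. The sketch below is hypothetical: the repository names, levels, and action names are illustrative. Unknown repositories fall back to the most restrictive level, and every decision is written to an audit log.

```python
from enum import Enum


class Autonomy(Enum):
    FULL = "full"              # agent may merge its own changes
    PARTIAL = "partial"        # agent may open PRs; a human merges
    SUPERVISED = "supervised"  # agent may only suggest changes


ALLOWED_ACTIONS = {
    Autonomy.FULL: {"suggest", "open_pr", "merge"},
    Autonomy.PARTIAL: {"suggest", "open_pr"},
    Autonomy.SUPERVISED: {"suggest"},
}

REPO_POLICY = {
    "docs-site": Autonomy.FULL,
    "shared-lib": Autonomy.PARTIAL,
    "payments-service": Autonomy.SUPERVISED,
}

audit_log: list[str] = []


def is_allowed(repo: str, action: str) -> bool:
    level = REPO_POLICY.get(repo, Autonomy.SUPERVISED)  # default to the most restrictive level
    allowed = action in ALLOWED_ACTIONS[level]
    audit_log.append(f"{repo}: {action} -> {'allowed' if allowed else 'denied'} ({level.value})")
    return allowed


print(is_allowed("docs-site", "merge"))           # True
print(is_allowed("payments-service", "open_pr"))  # False
```

Defaulting unknown repositories to the strictest level means a new service never gains autonomy by accident; someone has to add it to the policy on purpose.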

Agents will feel like native features. You will see inline task summaries, one-click approvals, and shared insights across your IDE and pipeline screens.

Memory systems will index code histories, design documents, and decisions, so agents can recall why a rule was added two years ago and avoid inadvertently breaking it today.

AI agents for software development bring real, measurable help to modern teams. They write small pieces of code, add tests, fix minor issues, prepare releases, and maintain a clean repository. They do not replace thoughtful engineering. They reduce busywork and raise the quality bar, allowing your people to focus on product outcomes.

If you plan a rollout, keep two rules close. Begin with narrow tasks where success is clearly defined. Keep strict guardrails and full logs from day one. This balance builds trust and delivers results without drama.

If you are considering a partner, choose an AI agent development company with proven AI development services and live references. Ask about security, data handling, on-prem options, and practical SLAs. The best partners will show you a small pilot first and let the numbers speak for themselves.

At Webmob Software Solutions, we help global teams design, implement, and support AI agents in software development across real stacks. We focus on guardrails, reliable integrations, and outcomes you can measure. Whether you want assistive agents inside the IDE or autonomous software agents that handle nightly chores, we can help scope a pilot and grow it the right way.

Looking to start now with a clear plan and less risk? Let’s talk about your use cases and stack. We will help you select the right first tasks and deliver a small win that proves its value.

They are software systems that take goals, plan steps, call developer tools, and act on your code with guardrails. They help with coding tasks, testing, docs, and release chores.

They reduce cycle time on repeat tasks, improve test coverage, maintain healthy dependencies, and standardize style. Engineers spend less effort on chores and more on product logic.

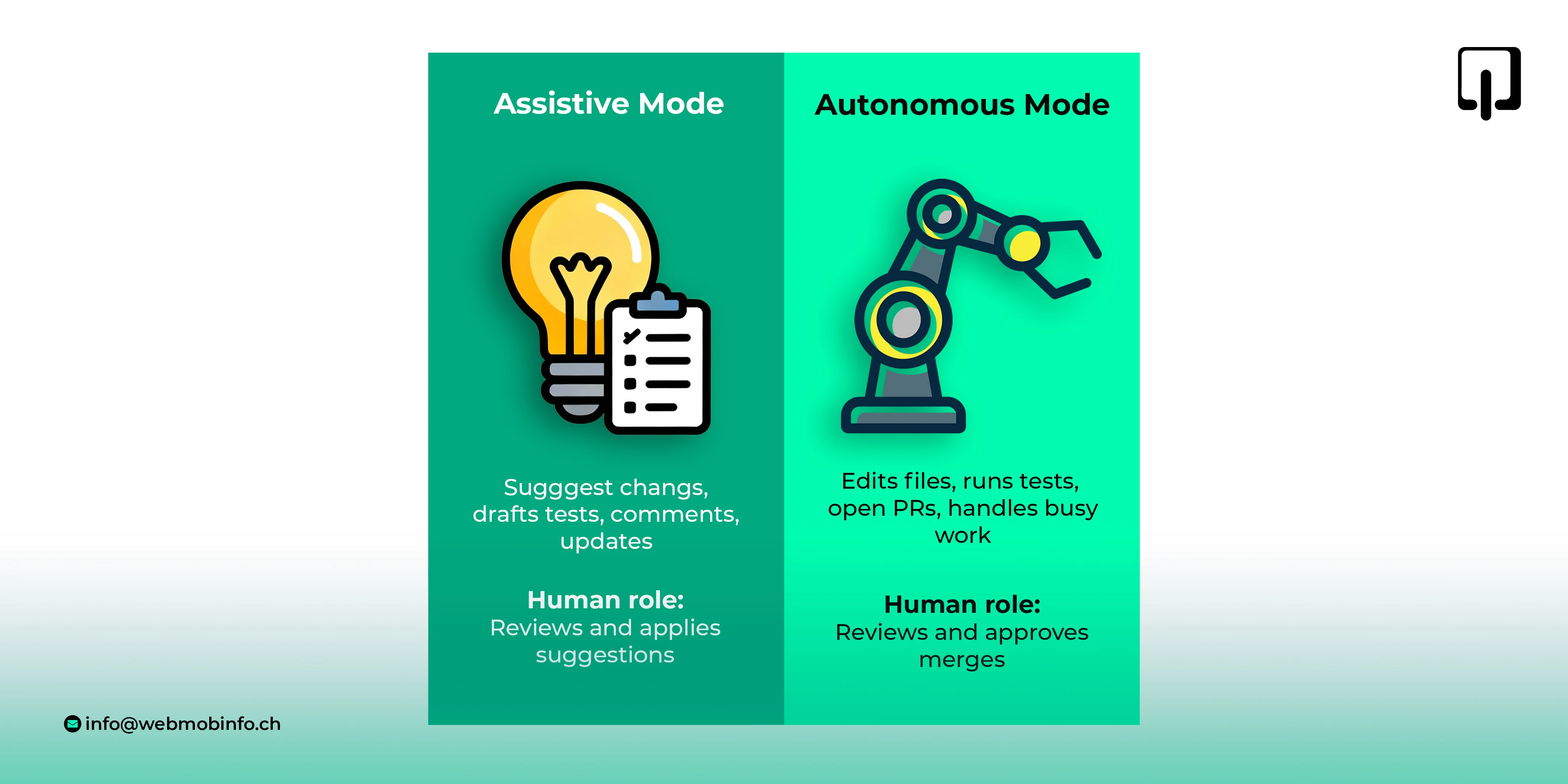

Yes. In assistive mode, they suggest edits and comments. In autonomous mode, they can branch, edit, run tests, and open PRs. Teams keep human reviews for merges.

Traditional automation follows fixed scripts. Agents reason about goals, adapt plans after reading outputs, and perform multi-step actions with feedback. They behave more like a teammate than a script.

Look for agents that integrate with your IDE, CI, and repo. Focus on reliability, logging, guardrails, and task depth. The “top” choice depends on your stack, security needs, and where you want results first.

Start small. Pick one or two low-risk tasks like test generation or doc updates. Set clear logs, policies, and approval steps. Measure cycle time, coverage, and PR throughput. Then expand to more tasks and repos.
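The three measures named above (cycle time, coverage, and PR throughput) are simple to compute once you export pull request timestamps and coverage figures. The sketch below uses made-up sample data and defines cycle time as the time from PR open to merge; teams define it differently (for example, from first commit), so pick one definition and compare the same numbers before and after the pilot.

```python
from datetime import datetime
from statistics import median


def cycle_time_hours(prs: list[tuple[str, str]]) -> float:
    """Median hours from PR opened to PR merged; timestamps are ISO 8601 strings."""
    durations = [
        (datetime.fromisoformat(merged) - datetime.fromisoformat(opened)).total_seconds() / 3600
        for opened, merged in prs
    ]
    return median(durations)


def throughput_per_week(merged_count: int, days: int) -> float:
    """Merged PRs per week over an observation window of `days` days."""
    return merged_count / (days / 7)


def coverage_change(before_pct: float, after_pct: float) -> float:
    """Change in line coverage, in percentage points."""
    return after_pct - before_pct


sample_prs = [
    ("2025-09-01T09:00:00", "2025-09-02T09:00:00"),  # 24 h
    ("2025-09-03T10:00:00", "2025-09-03T16:00:00"),  # 6 h
    ("2025-09-04T08:00:00", "2025-09-06T08:00:00"),  # 48 h
]
print(cycle_time_hours(sample_prs))   # 24.0
print(throughput_per_week(3, 14))     # 1.5
print(coverage_change(61.5, 68.0))    # 6.5
```

The median is used instead of the mean so that one long-running PR does not hide an improvement across the many small ones an agent typically handles.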

Copyright © 2025 Webmob Software Solutions